Hacker News is Obsessed with Aviation: Classifying 42 Million Posts with SLMs

A Quick Primer

As an engineer and private pilot, I often find myself enjoying the surprisingly high volume of aviation-related content on Hacker News. About a year and a half ago, I started wondering if language models could be used to answer the question of just how much of Hacker News pertains to aviation.

SLMs

I started pondering this question around the same time that LLMs became popular (2023). As a data practitioner, I saw them as a great tool for nuanced classification tasks in scenarios lacking a labeled ground-truth dataset. But the larger models were too expensive, and the tooling too unwieldy, to use them for offline data processing tasks.

In general, language models are an abstraction for data practitioners, allowing us to easily perform on-the-fly analysis of unstructured data without the pains that come with traditional ML, even if it comes at a higher compute cost. After all, a data scientist's or ML engineer's time is almost inarguably more valuable!

Increasingly, smaller pre-trained models are getting more performant and customizable, and the compute costs to run them keep decreasing. Inference cost is no longer nearly as much of a concern as appropriate tooling.

Down the Rabbit Hole

At Skysight, we've spent much of the past year optimizing an end-to-end tooling layer to solve these types of data-and-compute-intensive problems. We will have more specifics on that later, but feel free to reach out if you are looking for infrastructure to solve similar problems.

The Pipeline

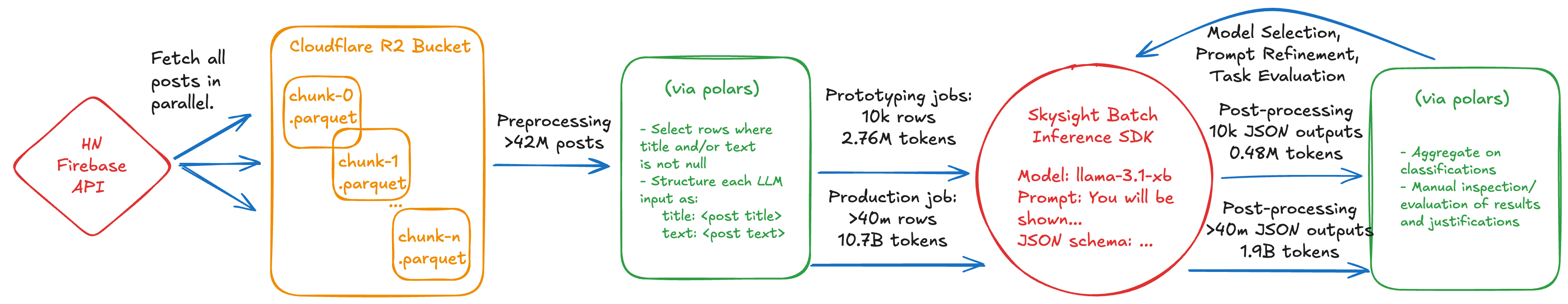

Without further ado, we'll explain the pipeline we used to perform our analysis (visualized above).

Data Gathering and LLM Pre-Processing

Hacker News offers a free API that can be used to gather all historical posts. We highly parallelized the fetching of this data and stuck the contents in a Cloudflare R2 Bucket distributed across >900 Parquet files. This left us with about 42 million items in total. (Let us know if you would like access to this raw data!)
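As a rough illustration of the fetching step, here is a minimal sketch of parallel retrieval from the public Hacker News Firebase API, using only the standard library. The function names, the worker count, and the injectable `fetch` parameter are assumptions made for illustration; this is a simplified sketch rather than our production fetcher, and writing the results to Parquet and uploading them to R2 is omitted.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from urllib.request import urlopen

HN_API = "https://hacker-news.firebaseio.com/v0"

def fetch_max_id():
    """Return the largest item id currently known to the API."""
    with urlopen(f"{HN_API}/maxitem.json", timeout=30) as resp:
        return json.load(resp)

def fetch_item(item_id):
    """Fetch one item (story, comment, job, poll, ...) as a dict, or None."""
    with urlopen(f"{HN_API}/item/{item_id}.json", timeout=30) as resp:
        return json.load(resp)

def fetch_batch(item_ids, fetch=fetch_item, max_workers=64):
    """Fetch many items concurrently, dropping ids that come back as null."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        items = pool.map(fetch, item_ids)  # results keep the input order
        return [item for item in items if item is not None]
```

Walking the ids from 1 up to `fetch_max_id()` in chunks and passing each chunk to `fetch_batch` would cover the full history, with each chunk then written out as one Parquet file.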

We then wanted to make the posts maximally useful to a language model. To do so, we concatenated each post's title and text (dropping posts that have neither) so as to provide enough detail but not overwhelm the model by displaying too much irrelevant information. We could have added more context to the input by including post comments/ancestors, other posts from the same user, or some of the user's profile data.

import io
import os

import boto3
import polars as pl

# Connect to the Cloudflare R2 bucket through its S3-compatible API.
r2_client = boto3.client(
    's3',
    endpoint_url=os.environ['R2_ENDPOINT_URL'],
    aws_access_key_id=os.environ['R2_ACCESS_KEY_ID'],
    aws_secret_access_key=os.environ['R2_SECRET_ACCESS_KEY'],
    region_name='wnam',
)

# List the Parquet files and load the first one for prototyping.
objects = r2_client.list_objects(Bucket='<bucket-name>', Prefix='hackernews/items/')
first_object = objects['Contents'][0]
obj = r2_client.get_object(Bucket='<bucket-name>', Key=first_object['Key'])
# Read the object fully into memory so Polars gets a seekable buffer.
df = pl.read_parquet(io.BytesIO(obj['Body'].read()))

# Drop items that have neither a title nor text.
df = df.filter(pl.col("title").is_not_null() | pl.col("text").is_not_null())

def create_prompt(df):
    # Concatenate title and text into a single "input" column for the model.
    df = df.with_columns(
        pl.concat_str([
            pl.lit(" Title: "),
            pl.col("title").fill_null("None"),
            pl.lit(" Text: "),
            pl.col("text").fill_null("None")
        ], separator=" ")
        .alias("input")
    )
    return df

df = df.head(10000) # for prototyping
df = create_prompt(df)
df = df.select(pl.col("id"), pl.col("input"))

Model Selection + Prompt Prototyping

After pre-processing, we took the first 10,000 rows to do some prototyping work including model selection, prompt engineering, and structured decoding decisions.

Our system is designed to make fast prototyping simple and cost-effective, so each cycle of iteration only took a minute or so and several cents to run. Instead of formal evaluations (we will cover those in later posts), we manually inspected results to steer towards a "good-enough" solution.

system_prompt = """
You will be shown a hacker news post's title and text (sometimes there is no text). Your job is to classify whether the post is related to aviation. It must explicitly, directly be related to aviation. If it is related to aviation, return True, otherwise return False. Provide a justification for your answer.

An example might be Title: "The Future of Aviation" Text: "The future of aviation is electric. We will see more electric airplanes in the future." This post is related to aviation, so the answer is True.

Another example might be Title: "Haskell is the best programming language" Text: None. This post is not related to aviation, so the answer is False.
"""

# Constrain the model's output to a boolean label plus a short justification.
json_schema = {
    "type": "object",
    "properties": {
        "is_aviation_related": {"type": "boolean"},
        "justification": {"type": "string"}
    },
    "required": ["is_aviation_related", "justification"]
}

# `infer` is the batch inference call provided by our tooling.
results = infer(df, column="input", system_prompt=system_prompt, model="llama-3.1-8b", job_priority=0, json_schema=json_schema)
df = pl.DataFrame(results)

# Keep only the rows the model classified as aviation related.
true_rows = df.filter(pl.col("outputs").str.json_decode().struct.field("is_aviation_related"))

for row in true_rows.iter_rows(named=True):
    print(row['outputs'])
    print(row['inputs'])
    print("---")
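Structured decoding should yield well-formed JSON, but it can still be worth guarding the parsing step when iterating on prompts. The following is a minimal sketch using only the standard library; `parse_output` is a hypothetical helper, not part of our tooling, and it assumes each output is a JSON string shaped like the schema above.

```python
import json

def parse_output(raw):
    """Return (is_aviation_related, justification), or None if malformed."""
    try:
        data = json.loads(raw)
    except (TypeError, json.JSONDecodeError):
        return None
    if not isinstance(data, dict):
        return None
    flag = data.get("is_aviation_related")
    reason = data.get("justification")
    # Enforce the types declared in the JSON schema.
    if not isinstance(flag, bool) or not isinstance(reason, str):
        return None
    return flag, reason
```

Rows for which this returns `None` can be counted and inspected separately instead of silently skewing the totals.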

Upon visual inspection, the results are pretty strong, with a high rate of true positives and few false positives (we will discuss false negatives later). Some stories it picked out include:

- The Wright Brothers: They Began a New Era

- Is Drone Racing Legal?

- Largest plane in the world to perform test flights in 2016

- NASA is Crash-testing Planes (videos)

- Autonomous flying car to become reality by 2025

- Lexus hoverboard in motion

- 'Son of Concorde' Could Fly London to New York in an Hour

- NASA rule of thumb I read somewhere.<p>"Test what you fly. Fly what you test."

- Drones are the new laser pointers :)

- I wonder if someone will sell a product that takes control of hovering drones and lands them without breaking them.

Some false positives include:

- Erle-Spider Is a Six-Legged Drone Powered by Snappy Ubuntu Core

- It seems like it might make sense to have a detachable (gas) engine. If you could remove it for your city driving, you'd save weight (== increased mileage). Then you'd fit it back only for the long trips. Not sure how practical such an arrangement would be.

Scaling Up

Once we settled on our final model selection, system prompt, and output schema, we scaled up to the full-sized run. After filtering out posts with neither a title nor text, we wound up with about 40 million posts to analyze, weighing in at a whopping 10.7 billion input tokens. Nothing to sneeze at!

Our system is designed to handle such workloads: inference completed in a couple of hours and generated an additional 1.9 billion output tokens, neatly structured as JSON strings for easy parsing.
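To put those figures in perspective, here is a back-of-the-envelope calculation. The token and post counts are the ones quoted above; the two-hour runtime is an assumption standing in for "a couple of hours", so the throughput number is only indicative.

```python
INPUT_TOKENS = 10.7e9        # input tokens quoted above
OUTPUT_TOKENS = 1.9e9        # output tokens quoted above
POSTS = 40e6                 # approximate number of posts analyzed
RUNTIME_SECONDS = 2 * 3600   # assumption: "a couple of hours" taken as 2 hours

avg_input_tokens = INPUT_TOKENS / POSTS
avg_output_tokens = OUTPUT_TOKENS / POSTS
tokens_per_second = (INPUT_TOKENS + OUTPUT_TOKENS) / RUNTIME_SECONDS

print(f"{avg_input_tokens:.1f} input tokens per post")
print(f"{avg_output_tokens:.1f} output tokens per post")
print(f"{tokens_per_second / 1e6:.2f}M tokens per second")
```

Under that assumption, the run averages a few hundred input tokens and a few dozen output tokens per post, at a combined rate on the order of a million tokens per second.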

Our Findings

In aggregate, 249,847 of the ~40.5 million posts were classified as being related to aviation, representing roughly 0.62% of Hacker News. However, of the nearly 5 million stories (that is, excluding comments and other item types), 1.13% are aviation related! That means there is roughly a 29% chance that the HN top stories contain a post related to aviation at any given time (feel free to check my math here). No wonder they seem so abundant!
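For anyone checking the math, one way to arrive at roughly 29% is to treat each front-page slot as an independent draw at the 1.13% rate. This is a minimal sketch: the 30-slot front page and the independence assumption are simplifications introduced here, and real front pages are certainly not independent samples.

```python
def prob_at_least_one(p, n):
    """Probability that at least one of n independent slots is a hit."""
    return 1 - (1 - p) ** n

AVIATION_STORY_RATE = 0.0113  # share of stories classified as aviation related
FRONT_PAGE_SLOTS = 30         # assumption: number of stories on the front page

chance = prob_at_least_one(AVIATION_STORY_RATE, FRONT_PAGE_SLOTS)
print(f"{chance:.1%}")
```

The complement form is used because "at least one" is easier to compute as one minus the probability that none of the slots is aviation related.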

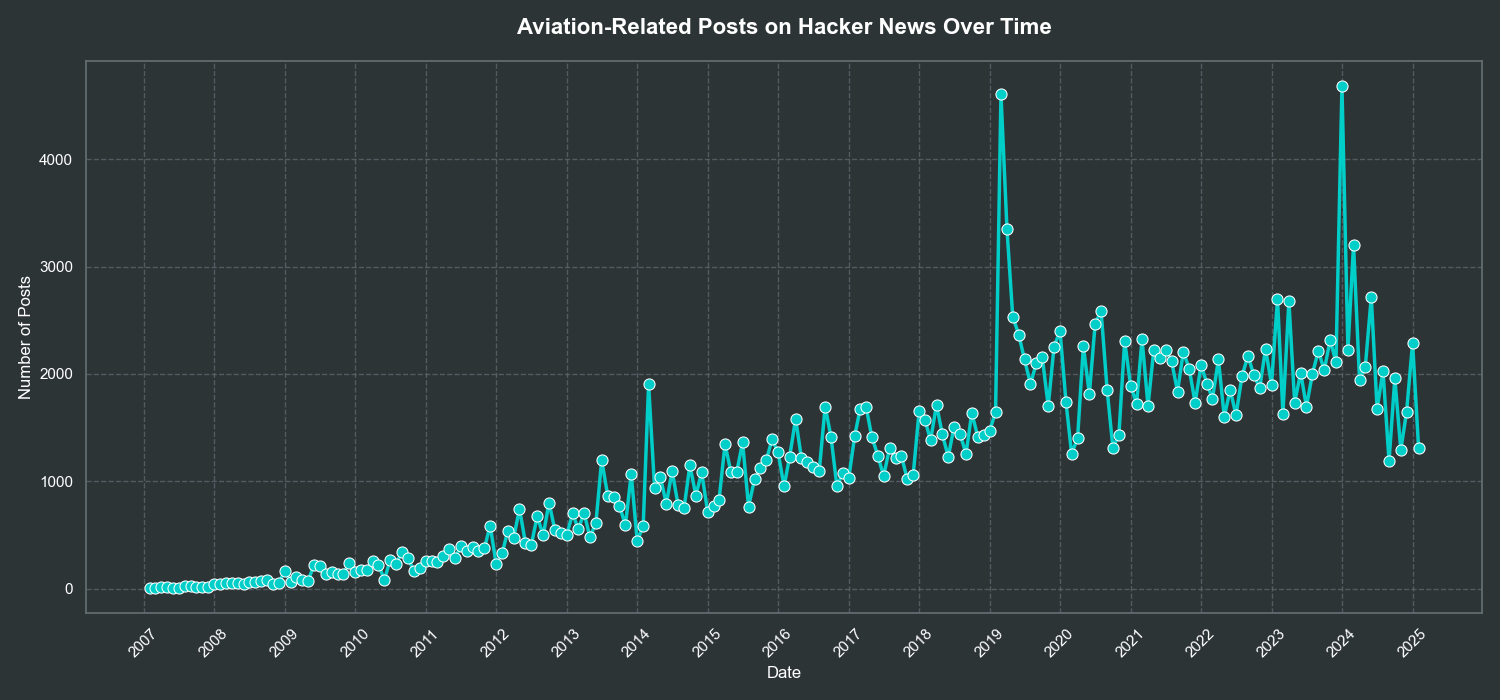

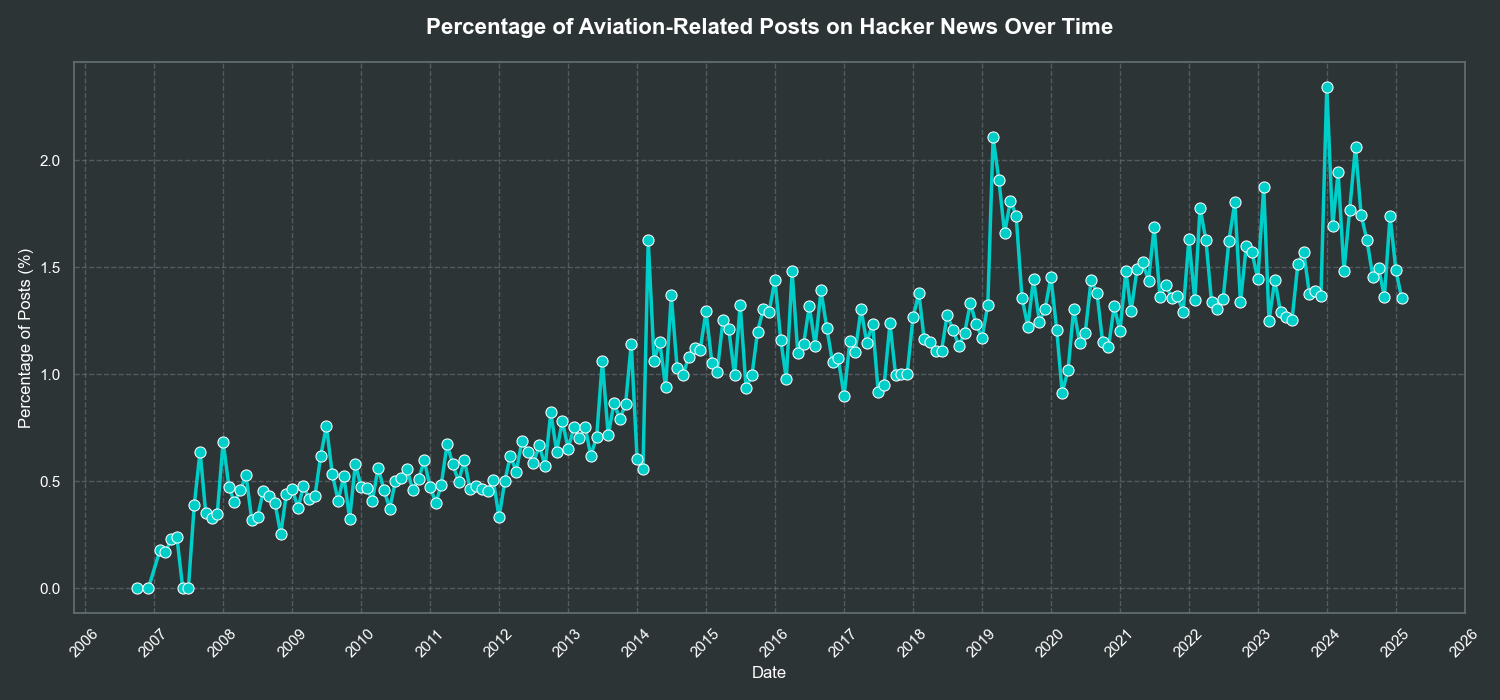

When we look at the frequency of aviation-related posts over time, we get some fascinating results as well. Digging in more closely, the two major spikes we observe occur in March of 2019, when the second 737 MAX crash took place, and in January of 2024, when an Alaska Airlines flight lost a door plug mid-flight.

Perhaps even more interestingly, the overall percentage of aviation-related posts appears to have risen over time, hovering around 0.5% from 2007 to 2012 and stabilizing around 1.5% in the last four years. Is it becoming a greater intellectual interest amongst technologists, are there more technology developments in the space, have there been more noteworthy incidents, or is it something else entirely?
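A time series like this one boils down to grouping posts by month and dividing flagged by total. Here is a minimal sketch using only the standard library, assuming each record carries the API's Unix `time` value and a boolean label; the record layout and the function name are illustrative, not our pipeline's.

```python
from collections import defaultdict
from datetime import datetime, timezone

def monthly_share(records):
    """Map 'YYYY-MM' -> fraction of posts flagged as aviation related.

    `records` is an iterable of (unix_time, is_aviation_related) pairs.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for unix_time, is_aviation in records:
        month = datetime.fromtimestamp(unix_time, tz=timezone.utc).strftime("%Y-%m")
        totals[month] += 1
        flagged[month] += bool(is_aviation)
    return {month: flagged[month] / totals[month] for month in sorted(totals)}
```

Bucketing in UTC keeps the month boundaries consistent regardless of where the code runs.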

And as a final "analysis", I'd like to shout out the top 32 overall contributors to the aviation-centric content on HN (by post/comment frequency)! Thank you to: rbanffy, WalterBright, sokoloff, JumpCrisscross, nradov, belter, mikeash, Animats, ceejayoz, jacquesm, PaulHoule, ChuckMcM, sandworm101, Retric, bookofjoe, jonbaer, Tomte, pseudolus, prostoalex, Robotbeat, Gravityloss, avmich, FabHK, dredmorbius, toomuchtodo, mannykannot, walrus01, InclinedPlane, dingaling, TeMPOraL, panick21_, and LinuxBender.
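A ranking like this is a simple frequency count over the flagged posts. The sketch below assumes each item is a dict with the API's `by` (author) field plus a boolean `is_aviation_related` label; that layout and the function name are hypothetical.

```python
from collections import Counter

def top_contributors(items, n=30):
    """Rank authors by how many of their items were flagged as aviation related."""
    counts = Counter(
        item["by"]
        for item in items
        # Skip unflagged items and items without an author (e.g. deleted ones).
        if item.get("is_aviation_related") and item.get("by")
    )
    return counts.most_common(n)
```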

Room for Improvement

As mentioned earlier, this analysis was relatively "hand-wavy", lacking statistical evaluation and rigor. It was primarily meant to be a first-pass demonstration of pre-trained language models at the scale of a dataset like Hacker News. Naturally, this leaves some blind spots in the analysis: we have not discounted false positives, nor accounted for the rate of false negatives.
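One simple way to account for both error types is to rescale the raw count by precision and recall estimated from a hand-labeled sample. This is a minimal sketch: the function name is introduced here for illustration, and the numbers in the usage line are placeholders, not measurements from this analysis.

```python
def corrected_count(flagged, precision, recall):
    """Estimate the true number of positives from a classifier's flagged count.

    flagged * precision removes the expected false positives;
    dividing by recall adds back the expected false negatives.
    """
    if not 0 <= precision <= 1 or not 0 < recall <= 1:
        raise ValueError("precision must be in [0, 1] and recall in (0, 1]")
    return flagged * precision / recall

# Placeholder values for illustration only.
print(corrected_count(1000, precision=0.9, recall=0.75))
```

Both rates would themselves come with sampling error, so a more careful treatment would report an interval rather than a single corrected number.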

In future posts, we will dig into ways to more rigorously perform language model evaluations in the context of batch inference. We will also cover additional methods such as ensemble modeling, distillation, and adversarial approaches to improve overall effectiveness, and perhaps revisit this topic!

That being said, I was thoroughly impressed by the ability of a small, pre-trained model to accurately classify this dataset, and by the cost flexibility that let us run this analysis quickly and inexpensively. It is hard to argue with the insights the model helped us generate without any specialized ML engineering or data-labeling work, and exciting to consider the use cases this will unlock in the near future.

Get in Touch

If you have a similar batch inference use case you would like to discuss with us, or would like early access to our products, email us at team@skysight.inc. We are also hiring engineers and researchers to help us optimize large-scale inference systems. If you are interested in chatting about job opportunities with us, send an email to jobs@skysight.inc.