Generating 1 Million Synthetic Humans - a New Method for Seeding Diverse LLM Outputs

Context

Among the many use cases for batch LLM inference, synthetic data is one we are especially excited about at Skysight.

Unfortunately, useful synthetic data doesn't come out of the box with LLMs: you can't just ask an LLM to generate a thousand outputs by giving it the same prompt a thousand times with a high temperature setting.

In our first blog post, we approached this issue simply by applying different combinations of domains, difficulties, and languages, and industries - then "mad-libbing" them into prompts. We realized we can do a lot better by creating statistically representative prompt seeds.

The Intuition

In traditional numerical machine learning, you can accomplish heterogeneity (or reproducibility) purely with numerical seeds. But with language models, you need to seed such diversity with (as one might expect) - language! And if you are looking to create diversity that models some real-world data, you can't just generate random language. You need to generate textual seeds that approach a statistical representation of the relevant features of that data.

We think a pretty good, generalizable and reusable seed is a sample over the human population, since LLMs are generally just reproducing human-generated content. Such a seed can allow an LLM to "think" and speak from the lens of a multitude of different human perspectives. Perhaps you've already tried this on a small scale with ChatGPT or similar (e.g. what would Larry David say to X question?) That's fun, but we think it gets far more interesting on a population level.

Of course, this isn't the only seed (or even an appropriate seed) for many use cases, but we think the approach is generally useful.

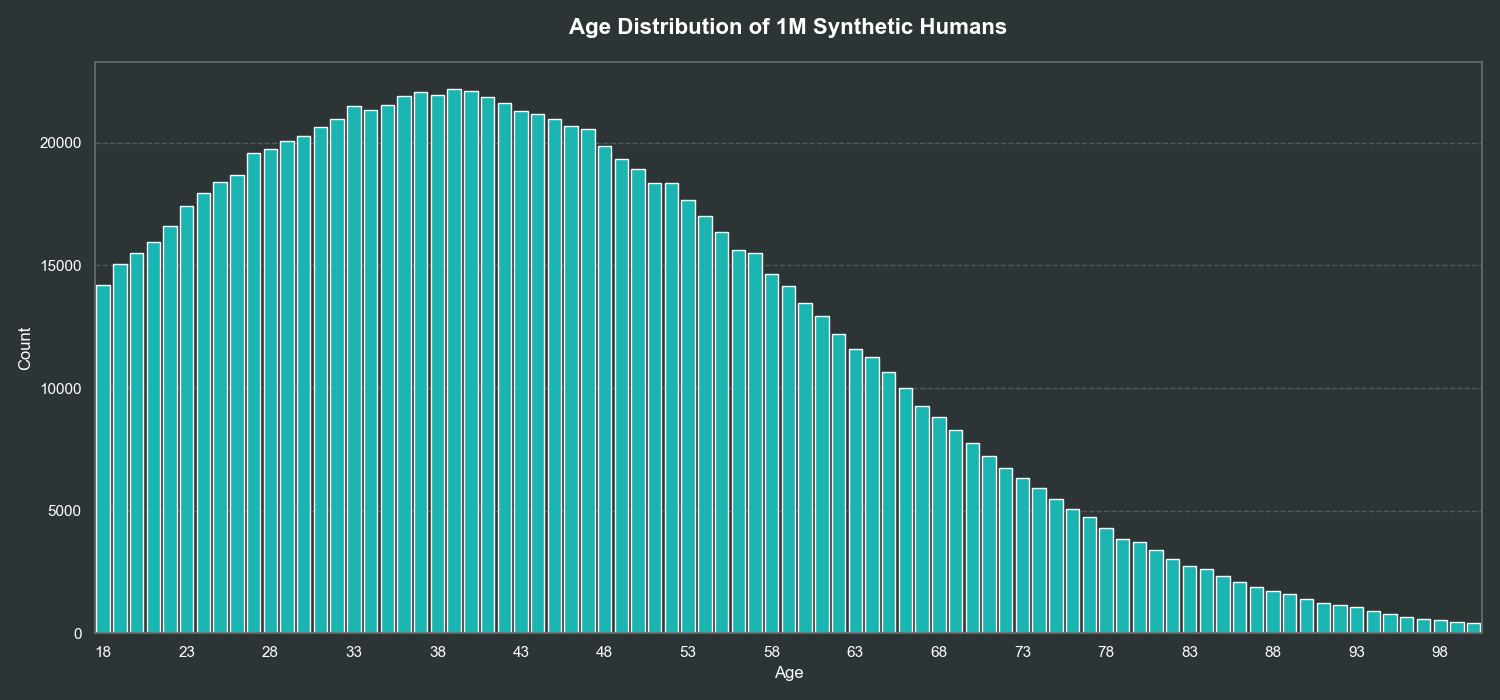

Sampling from the (US) Population

We decided to keep things relatively simple for a first go, and attempted to model US demographics. We used a handful of starting attributes:

- age

- gender

- location (city and state)

- employment category

- median annual wage

For the first two attributes, we used the sample distribution found in the US Demographics Wikipedia Page, and used a minimum age of 18 and a maximum age of 100. For location data, we used Census Data, appropriately weighted based on populations. For employment category and annual wage data, we used data from the US Bureau of Labor Statistics, again weighted by worker counts in each category, but this time coupled together (wages corresponding each employment category).

This produces samples like:

{

"id": "syn-QmFqR87uc6",

"age": 43,

"gender": "Female",

"location": "Millville, Utah",

"occupation_category": "Home Health and Personal Care Aides",

"annual_wage": 33530.0

}

{

"id": "syn-iNdIevVGrL",

"age": 31,

"gender": "Male",

"location":

"West St. Paul, Minnesota",

"occupation_category":

"Dental Hygienists", "annual_wage": 87530.0

}

{

"id": "syn-gUmdsFEF3m",

"age": 25,

"gender": "Male",

"location": "New York, New York",

"occupation_category": "Software Developers",

"annual_wage": 132270.0

}

While seemingly narrow, this produces an enormous amount of diversity and relative "realism" on a population level. We can comfortably sample a million times without too much worry of significant overlap given the raw number of combinations.

Mad (LLM) Science

With these samples, we can have an LLM do the rest of the creative work to generate truly diverse human narratives. We used the following system prompt, and a strong reasoning model (Qwen/QwQ-32B) to accomplish this. Note that we also did use random numerical seeds for each input, further ensuring uniqueness of responses. We used a fairly standard temperature of 0.6.

You are helping a company build a synthetic human dataset.

You will be shown a dictionary of attributes for a human. Your job is to take the list of attributes,

and generate a number of qualitatve descriptions for the human based on the attributes.

You must include the following qualitative descriptions:

- demographic_summary: a summary of the demographic of the human

- background_story: a detailed background story of the human

- daily_life: a detailed description of the human's daily life

- digital_behavior: a detailed description of the human's digital behavior

- financial_situation: a detailed description of the human's financial situation

- values_and_beliefs: a detailed description of the human's values and beliefs

- challenges: a detailed description of the human's challenges

- aspirations: a detailed description of the human's aspirations

- family_and_relationships: a detailed description of the human's family and relationships

- personality: a detailed description of the human's personality

- political_beliefs: a detailed description of the human's political beliefs

- education: a detailed description of the human's education

We then use a separate model to extract out each of the fields for easier consumption later on. We do this with a smaller model (meta-llama/Llama-3.1-8B-Instruct), and let a somewhat larger model (google/gemma-3-27b-it) handle more challenging cases.

This leaves us with demographic summaries like:

A 43-year-old female residing in Millville, Utah, a rural community known for its tight-knit social

fabric and conservative values. She works as a Home Health and Personal Care Aide, providing in-home

care to elderly and disabled individuals, earning an annual wage of $33,530. This places her in the

lower-middle-income bracket in Utah, where the median household income is higher, contributing to

financial constraints.

Financial situations like:

With a salary above the median for dental hygienists in Minnesota, he enjoys financial stability.

He and his spouse own a modest three-bedroom home in West St. Paul, which they purchased with a

30-year mortgage. Their combined income allows them to cover household expenses, childcare costs,

and occasional family vacations. However, they’re mindful of student loans from his associate’s

degree and her nursing program, which they’re actively paying down. He contributes to a 401(k)

and a college savings account for their child but occasionally feels stretched by unexpected

medical bills or home repairs. While not wealthy, he prioritizes long-term security over luxury

spending.

And education descriptions like:

He holds a Bachelor of Science in Computer Science from a public university, where he excelled in

courses on algorithms, software design, and machine learning. During summers, he interned at a

fintech startup and a nonprofit tech organization, gaining hands-on experience. Post-graduation, he

pursued certifications in cloud computing (AWS, Azure) and completed a Coursera specialization in

AI ethics. He regularly attends webinars and workshops to stay ahead of industry trends, believing

formal education alone isn’t enough in tech’s fast-paced evolution.

Creating 1 million "Syns"

After running some prototyping runs on 1,000 and 10,000 sized scales, we scaled up to 1,000,000 synthetic humans. This was a large run, generating around 2.4 billion tokens of reasoning responses. As a quick plug: large-scale and efficient LLM inference workloads are exactly what we excel at (hint: it didn't cost as much as you might think). Feel free to reach out if you have similar workload needs!

The Dataset

The resulting dataset is open-source, free, and licensed for commercial reuse. You can download it here from HuggingFace: https://huggingface.co/datasets/skysight-inc/synthetic-humans-1m.

We created this dataset largely for demonstration purposes, and to highlight a new methodology for seeding LLM synthetic data. If you train LLMs yourself and would like to use the dataset to generate synthetic data (or have any needs around synthetic data), we would love to hear from you!

We are particularly interested in using it for analytical purposes with applications in marketing, sociology, media, and politics. We will soon follow up with another blog post that delves into such use cases.

Limitations and Disclaimers

The sampling we did was extremely simplistic, varying only a handful of attributes. In future versions, we want to extend this to many more. We also did kept the attributes independent (which is a bad statistical assumption), and it yields funky anomalies (we came across a few 18 year-old physicians for example).

We also identified our humans with syn-<id> identifiers, rather than giving them names. However, the model often liked to create names for the humans, such as Emily, Jane, or John. Instead of supressing this, we let it get creative. It should go without saying that any associations to real humans in our dataset are merely coincidental.

Get in Touch

If you have similar batch inference or custom synthetic data needs - email us at team@skysight.inc. We are also hiring engineers and researchers to help us optimize large-scale inference systems. If you have an interest in discussing job opportunities with us, send an email to jobs@skysight.inc.